Hi, I have a requirement to measure a voltage between 0 and 8.2 V (approx.) from an AC line which swings up to 100 V. The ADC accepts a maximum of 1.024 V.

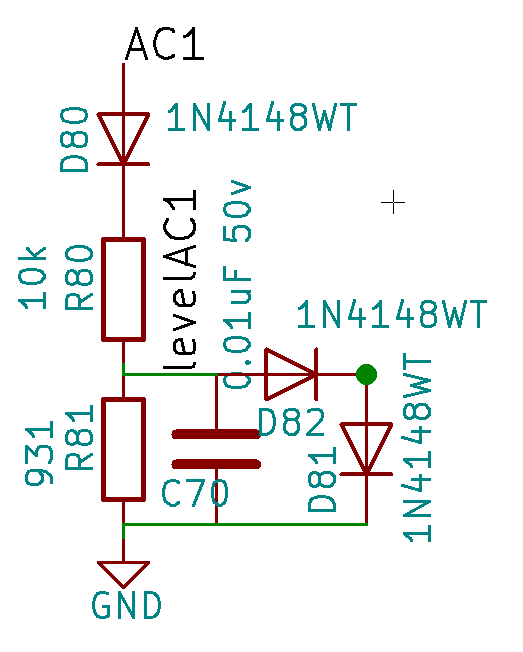

I came up with the following circuit:

The 10 kΩ resistor is the highest value the PIC recommends, and the two diodes are a cheap way of ensuring the voltage doesn't rise above what the PIC can tolerate. Everything withstands 1 W. Resistors are 0.1%. Resistor values are rough for now.

One area I haven't considered is diode D80 and its voltage drop, which I have to ensure stays accurate across the current draw of 0 to 0.71 mA.
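To make the concern concrete, here is a rough sketch of how a diode's forward drop moves with current, using the ideal Shockley diode equation. The saturation current and ideality factor below are placeholder assumptions, not values from any datasheet, and temperature effects are ignored, so treat the numbers as illustrative only.

```python
import math

# Assumed, illustrative parameters (NOT taken from a real part's datasheet).
SATURATION_CURRENT_A = 1e-9   # hypothetical reverse saturation current
IDEALITY_FACTOR = 2.0         # hypothetical ideality factor n
THERMAL_VOLTAGE_V = 0.02585   # kT/q at roughly 300 K


def diode_forward_voltage(current_a,
                          saturation_current_a=SATURATION_CURRENT_A,
                          ideality=IDEALITY_FACTOR,
                          thermal_voltage_v=THERMAL_VOLTAGE_V):
    """Forward voltage from the Shockley equation, solved for V."""
    return ideality * thermal_voltage_v * math.log(
        current_a / saturation_current_a + 1.0
    )


if __name__ == "__main__":
    # Sweep the 0 to 0.71 mA range mentioned above.
    for current_ma in (0.0, 0.01, 0.1, 0.71):
        drop_v = diode_forward_voltage(current_ma * 1e-3)
        print(f"{current_ma:5.2f} mA -> {drop_v:.3f} V")
```

In this model the drop changes by about n·Vt·ln(10) for every tenfold change in current, so it is far from constant near zero current.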

While resistors have a tolerance, what's the diode equivalent? If there isn't one per se, which type of diode is the most suitable?
