

Only 250mA? From little AAA Ni-Cad cells? Most of the AAA Ni-MH cells in my solar garden lights can produce 8A.

But for how long? The TS stated he wanted to discharge his cells at a 0.1C rate, which means he is discharging 2500mAh cells. Read his post!
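As a quick check of the numbers in this exchange, here is a small Python sketch of the C-rate arithmetic: 0.1C of a 2500mAh cell is 250mA, and at that constant current it takes roughly 10 hours to remove the rated capacity. The function names are illustrative only, and the runtime is an idealized figure that ignores the end-of-test voltage and the capacity the cell actually delivers.

```python
def discharge_current_ma(capacity_mah: float, c_rate: float) -> float:
    """Constant discharge current (mA) for a given capacity (mAh) and C-rate."""
    return capacity_mah * c_rate


def ideal_runtime_h(capacity_mah: float, current_ma: float) -> float:
    """Idealized hours needed to remove the full rated capacity at constant current."""
    return capacity_mah / current_ma


current = discharge_current_ma(2500, 0.1)  # 0.1C of a 2500 mAh cell
hours = ideal_runtime_h(2500, current)
print(f"{current:.0f} mA for about {hours:.0f} h")  # 250 mA for about 10 h
```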

No, but per the original circuit posted in #1, the circuit is set up to maintain a constant voltage of 1V across the 4Ω source resistor. That implies that the drain current of the NFET is 0.25A, and the gate of the NFET only needs to sit Vth plus a small ΔV above the source to make that happen.
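The current setting follows from Ohm's law alone: once the loop holds V(s) at the reference, I = V(s)/R. A one-line Python sketch (the function name is just for illustration); note that the battery voltage does not enter the calculation, which is why the current stays flat as Vb changes, as long as the battery supplies enough voltage for the NFET to regulate.

```python
def sink_current_a(v_setpoint: float, r_source: float) -> float:
    """Current forced through the source resistor once the loop holds V(s) at v_setpoint."""
    return v_setpoint / r_source


# Values from circuit #1: 1 V across the 4 ohm source resistor.
print(sink_current_a(1.0, 4.0))  # 0.25
```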

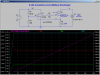

As long as the Vth of the NFET is 2V or less, even an LM358-type opamp has enough positive drive to turn on the NFET sufficiently. Here is a simulation using a power NFET with a Vth of 2V. The LM358 compares the voltage at the source, V(s), to a 1V reference voltage. The output of the opamp makes V(g) whatever it needs to be in order to force 0.25A through R4, so that the voltage at the non-inverting input just matches the one at the inverting input.
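To illustrate how the opamp "makes V(g) whatever it needs to be", here is a crude Python model of the loop. It assumes a simple square-law NFET in saturation, I = K·(Vgs - Vth)², with a hypothetical K of 2.5 A/V², the 2V Vth from the simulation, and a drain voltage high enough to keep the FET saturated. The opamp is replaced by a discrete integrator that nudges V(g) in proportion to the error between the 1V reference and V(s). This is a sketch of the feedback idea only, not a model of the LM358 or of the power NFET used in the simulation.

```python
import math

V_TH = 2.0      # NFET threshold voltage, V (as in the simulation)
K = 2.5         # hypothetical square-law parameter, A/V^2
R_SOURCE = 4.0  # source resistor R4, ohms
V_REF = 1.0     # reference voltage, V


def source_voltage(v_gate: float) -> float:
    """Solve the source node of a square-law NFET in saturation.

    With overdrive x = Vg - Vs - Vth and Vs = R * K * x**2,
    x is the positive root of R*K*x**2 + x - (Vg - Vth) = 0.
    """
    u = v_gate - V_TH
    if u <= 0.0:
        return 0.0  # FET is off: no current, so no voltage across R4
    a = R_SOURCE * K
    x = (-1.0 + math.sqrt(1.0 + 4.0 * a * u)) / (2.0 * a)
    return a * x * x


def settle_gate(v_ref: float = V_REF, gain: float = 0.5, steps: int = 200) -> float:
    """Crude integrator standing in for the opamp: raise V(g) while V(s) < v_ref."""
    v_gate = 0.0
    for _ in range(steps):
        error = v_ref - source_voltage(v_gate)
        v_gate += gain * error
    return v_gate


vg = settle_gate()
print(f"V(g) = {vg:.3f} V, I = {source_voltage(vg) / R_SOURCE:.3f} A")
```

With these assumed values V(g) settles about 0.32V above V(s) + Vth, i.e. near 3.3V; a different NFET changes only the size of that small overdrive term.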

I plot V(s) [green], V(g) [red], -I(V2) [the battery current, blue] and the dissipation in M1 [violet] as a function of V(Vb), which shows that the circuit can be used with any battery voltage from 1V to ~20V, provided you pay attention to heatsinking M1. This means that the circuit is usable with a single NiCd cell, although it will only discharge it down to 1V.
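The power in M1 is its drain-source voltage times the regulated current. Assuming the battery connects straight to the drain (so Vds = Vb - V(s)) and the current is held at 0.25A, a short Python sweep from 0 to 20V in 0.1V steps shows why heatsinking matters at the top of the range. Below the 1V setpoint the FET is treated as fully on with negligible Vds, so its dissipation is taken as zero; that is a simplification.

```python
I_SINK = 0.25  # A, set by 1 V across the 4 ohm source resistor
V_SET = 1.0    # V, the source-voltage setpoint


def m1_dissipation_w(v_batt: float, v_set: float = V_SET, i_sink: float = I_SINK) -> float:
    """Power in M1, assuming Vds = Vb - V(s) while the loop is regulating."""
    vds = max(v_batt - v_set, 0.0)  # below the setpoint the FET is simply fully on
    return vds * i_sink


# Sweep Vb from 0 V to 20 V in 0.1 V steps (step / 10 avoids accumulating float error).
sweep = [(step / 10, m1_dissipation_w(step / 10)) for step in range(201)]
peak_v, peak_p = max(sweep, key=lambda row: row[1])
print(f"worst case: {peak_p:.2f} W at Vb = {peak_v:.1f} V")  # 4.75 W at 20.0 V
```

The dissipation rises linearly with Vb, so the heatsink has to be sized for the highest pack voltage you intend to test.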

When discharging a multiple-cell NiCd pack, I would stop the test when the battery voltage drops below 1.05V × n, where n is the number of cells. As a multiple-cell pack ages, it is no better than its weakest cell: as the first cell craters, the load current reverses the voltage across that bad cell, rapidly reducing the total pack voltage.
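The stop rule is simple enough to write down. The Python sketch below uses the 1.05V-per-cell figure from the post; the per-cell readings in the example are hypothetical, chosen only to show how a single reversed cell can pull a pack under the limit while the other cells still look healthy.

```python
def cutoff_voltage(n_cells: int, volts_per_cell: float = 1.05) -> float:
    """End-of-test pack voltage: 1.05 V times the number of cells."""
    return volts_per_cell * n_cells


def should_stop(v_pack: float, n_cells: int) -> bool:
    """True once the pack voltage has dropped below the cutoff."""
    return v_pack < cutoff_voltage(n_cells)


# Hypothetical 8-cell (9.6 V nominal) pack readings, in volts.
healthy = [1.2] * 8
one_reversed = [1.2] * 7 + [-0.4]  # weakest cell driven into reversal (illustrative value)

print(f"cutoff: {cutoff_voltage(8):.2f} V")
print(should_stop(sum(healthy), 8), should_stop(sum(one_reversed), 8))
```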



With the NFET I used in my simulation, the output pin of the opamp doesn't ever have to go above about 3.5V, regardless of how many cells are in the pack. The output pin of the opamp only has to get to V(s) + Vth + Δ, where Δ is a few hundred mV. V(s) is 1V, because 0.25A*4Ω = 1V....

When I try to test my 9.6V, 10.8V and 12.0V tool battery packs, I can't get the opamp output to go high enough.

P.S. I increased Vcc to 10V to get the 6 cell simulation.

In your simulation, the battery current is about 250mA for a single cell, but with more cells the current (and the voltage at the MOSFET gate) wrongly increases, to a battery current of about 1500mA. The battery current increased because you wrongly raised the input voltage to about 7.6V when it was supposed to stay at 1V. The battery cells are supposed to be in series; do you have them in parallel instead? Do you understand the difference between series and parallel?

The independent variable in my simulation is the simulated battery voltage Vb (the horizontal X-axis of the plot). The dependent variables I plotted are the gate voltage V(g), the source voltage V(s), the battery current -I(Vb) (which is flat regardless of Vb), and the power dissipated in the NFET (the big expression), which increases linearly as the battery voltage increases.

I did a .DC analysis, sweeping the battery voltage Vb from zero to 20V in steps of 0.1V. Yours looks like a .TRAN transient simulation, which is not relevant here.



Ah ha! I see what is missing from the DC analysis, and Audioguru's comment on my bad simulations was the key. As Audioguru pointed out, I neglected to increase the load as I increased the setpoint and cell count. The DC analysis increases the cell count (Vb), but it neither increases the setpoint (Vs = n volts) nor increases the load resistance (which compensates for the higher setpoint voltage to maintain the 250mA current).
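Here is a Python sketch of what scaling the setpoint implies. It assumes Vs = 1V per cell, R = Vs/0.25A, the same hypothetical square-law NFET as before (Vth = 2V, K = 2.5 A/V²), and an assumed 1.5V gap between the opamp's highest output and Vcc (roughly typical of LM358-class parts; check the datasheet for your load and supply). Because V(g) must sit at Vs + Vth + Δ, the required gate drive, and hence Vcc, rises by about 1V for every added cell.

```python
import math

V_TH = 2.0            # NFET threshold, V (as in the simulation)
K = 2.5               # hypothetical square-law parameter, A/V^2
I_SINK = 0.25         # target discharge current, A
V_PER_CELL = 1.0      # setpoint per cell, V
OPAMP_HEADROOM = 1.5  # assumed gap between max opamp output and Vcc, V


def scaled_design(n_cells: int) -> tuple[float, float, float, float]:
    """Return (setpoint V, source resistor ohms, required V(g), minimum Vcc)."""
    v_set = V_PER_CELL * n_cells
    r_source = v_set / I_SINK                      # keeps I at 0.25 A
    v_gate = v_set + V_TH + math.sqrt(I_SINK / K)  # V(s) + Vth + overdrive
    vcc_min = v_gate + OPAMP_HEADROOM
    return v_set, r_source, v_gate, vcc_min


for n in (1, 6, 8, 9, 10):
    v_set, r, v_g, vcc = scaled_design(n)
    print(f"{n:2d} cells: Vs={v_set:4.1f} V  R={r:4.0f} ohm  V(g)={v_g:5.2f} V  Vcc>={vcc:5.2f} V")
```

With these assumptions, the 8-, 9- and 10-cell cases (the 9.6V, 10.8V and 12.0V packs) all need V(g) above 10V, so an opamp on a low fixed supply runs out of drive.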

From that, it appears the idea was to have Vs = 1V per cell (Vs = n volts for n cells) so the batteries cannot be over-discharged. If Vs is not increased, then it is possible to drive an n-cell battery pack down to a total output voltage of 1V and possibly destroy the cells.

Makes sense to me. To you?

Correct. Then a lot of Vs voltage is needed, since there is no battery-voltage sensing and disconnect circuit.

I think it was intended as a two-for-one. The drawback is the limit on the number of cells that can be tested.
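The "two-for-one" can be sketched with an idealized Python model: above the setpoint the loop holds 0.25A; below it the FET is fully on and, ignoring its Rds(on), only the source resistor limits the current, so the load current falls in proportion to the pack voltage instead of continuing at full rate. This simplification ignores the Vds the FET needs and the cells' internal resistance; it is only meant to show the self-limiting behavior.

```python
def pack_current_a(v_pack: float, n_cells: int,
                   v_per_cell: float = 1.0, i_target: float = 0.25) -> float:
    """Idealized sink current with the setpoint scaled to n_cells volts."""
    v_set = v_per_cell * n_cells
    r_source = v_set / i_target
    # Regulating region: V(s) is held at v_set, giving i_target.
    # Below v_set the FET is treated as a short, so V(s) simply follows the pack.
    return min(v_pack, v_set) / r_source


for v in (9.6, 8.4, 8.0, 6.0, 4.0):
    print(f"{v:4.1f} V -> {pack_current_a(v, 8) * 1000:5.1f} mA")
```

For an 8-cell pack the model holds 250mA down to 8V, then tapers (187.5mA at 6V, 125mA at 4V).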