Hi!

I'd like some help to debug my simulation, please.

I've probably made a mistake, because when I launch the simulation, the calculation takes hours instead of a few seconds.

I've been trying for hours to understand what is wrong, but I still haven't found the cause of the problem.

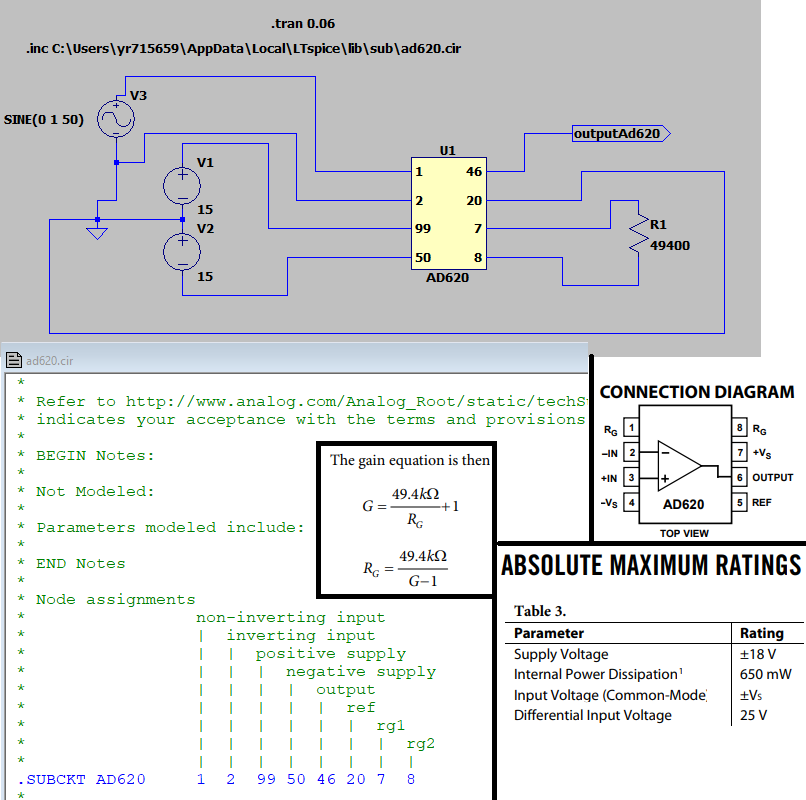

In the screenshot, I used the following parameters:

Input signal: sine wave with 1 V amplitude, 0 V DC offset, and 50 Hz frequency.

The gain is supposed to be G = 1 + 49.4k/49400 = 2.
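As a quick sanity check of the gain figure, here is a minimal sketch of the arithmetic. It assumes "49,4K" means 49.4 kilo-ohms and "49400" is a resistance in ohms; the variable and function names are hypothetical and only there for illustration.

```python
# Values taken from the post; the units are an assumption.
R_FIXED_OHMS = 49.4e3   # "49,4K", read as 49.4 kilo-ohms
R_GAIN_OHMS = 49400.0   # "49400", read as ohms


def expected_gain(r_fixed: float, r_gain: float) -> float:
    """Gain formula quoted in the post: G = 1 + r_fixed / r_gain."""
    return 1.0 + r_fixed / r_gain


gain = expected_gain(R_FIXED_OHMS, R_GAIN_OHMS)

# With a 1 V amplitude sine input, the expected output amplitude is G * 1 V.
input_amplitude_v = 1.0
expected_output_amplitude_v = gain * input_amplitude_v

print(f"G = {gain:.3f}, expected output amplitude = {expected_output_amplitude_v:.3f} V")
```

So with these values the output should be a 50 Hz sine wave of about 2 V amplitude once the simulation runs.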

Thanks in advance.
