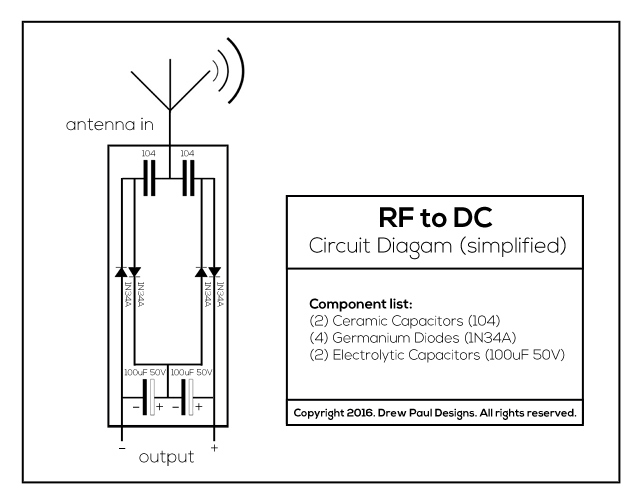

Hi there, I am just experimenting with a simple circuit that recharges a 1.2 V 3000 mAh AA battery, but I have no idea what the correct voltage to apply to the battery is. Do I need to apply 1.2 volts? Maybe a little bit more? This circuit recharges the battery using induction, so depending on the distance, the circuit receives more or less voltage.