I just finished implementing the Black RNG. I used a cache of 3.2 million bits and iterated the cache 100 times with an engine recommended by Mr Black (10 cylinders + 1 hold).

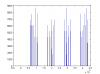

Attached is a histogram of the 100 000 32-bit unsigned integers that were generated. It does not look too good to me. The smallest number generated was 11936; above that, the distribution looks quite even. But I think I'll have to double-check my code after Mr RB's results.

Edit: added the test results from the Diehard Battery (blackout.txt), with 80 million bits generated and tested. At a quick look, I think the Black RNG passed all 15 tests. I don't know what happened with the histogram I plotted; I'll have to check that later.

EDIT: I believe the histogram is correct. When I generate 16-bit numbers, the histogram is quite uniform from 0 to 65535. But when I generate 32-bit integers, the histogram is uniform only from 10000 to 2^32. The count for everything below 10000 is zero (and that is the best case I was able to generate so far). So, there are some major issues with the Black RNG.
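As a baseline for reading that histogram, it may help to ask what an ideal uniform 32-bit generator would do with the same sample size. The sketch below is only that baseline, not the Black RNG: it assumes 100 000 independent uniform draws (the count from the first histogram), and the function names are made up for illustration.

```python
def prob_no_sample_below(threshold: int, n_samples: int, bits: int = 32) -> float:
    """P(all n_samples uniform draws from [0, 2**bits) are >= threshold)."""
    p_single = 1.0 - threshold / 2**bits
    return p_single ** n_samples


def expected_minimum(n_samples: int, bits: int = 32) -> float:
    """Approximate expected minimum of n_samples uniform draws from [0, 2**bits)."""
    return 2**bits / (n_samples + 1)


n = 100_000  # assumption: same sample count as the first histogram
print(f"expected minimum        ~ {expected_minimum(n):.0f}")
print(f"P(nothing below 10000)  = {prob_no_sample_below(10_000, n):.3f}")
```

If these assumptions match the run, an ideal generator would have an expected minimum of roughly 43 000 and would leave everything below 10000 empty in about 79% of runs, so the empty low range on its own may be weaker evidence than it first appears.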

EDIT: More histograms and scatterplots. There seems to be an obvious structure in the individual 32-bit integers in the generator cache. I used a 6400-bit cache with the same 17-cylinder engine Mr Black used in his example code. There were 50 passes between each number saved.

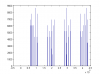

The "Histogram-of-first-32bits.png" shows the distribution of the first 32 bits generated (50 passes between each number saved). A very horrible-looking distribution for an RNG.

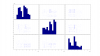

The "ScatterMatrix.png" shows the distribution of the random 3-tuples generated. The "3DscatterPlot.png" shows how the generated 3D points cluster on a few spots in 3D space.
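For anyone who wants to repeat the 3-tuple check on their own output, here is a minimal sketch of one way to do it numerically instead of by eye. It groups consecutive values into (x, y, z) points and counts how many fall into each cell of a coarse 8x8x8 grid; heavy clustering would show up as many empty cells and a few very full ones. The helper names are hypothetical, and the data comes from Python's own `random` module as a stand-in, NOT from the Black RNG.

```python
import random
from collections import Counter


def triples(values):
    """Group a flat sequence into non-overlapping (x, y, z) tuples."""
    usable = len(values) - len(values) % 3  # drop a trailing partial tuple
    return [tuple(values[i:i + 3]) for i in range(0, usable, 3)]


def occupied_cells(points, bits=32, cells_per_axis=8):
    """Count points per cell of a coarse 3D grid.

    cells_per_axis must be a power of two; the top bits of each
    coordinate select the cell along that axis.
    """
    shift = bits - (cells_per_axis.bit_length() - 1)
    return Counter((x >> shift, y >> shift, z >> shift) for x, y, z in points)


# Stand-in data only: replace `values` with the generator output under test.
rng = random.Random(1)
values = [rng.getrandbits(32) for _ in range(30_000)]
cells = occupied_cells(triples(values))
print(len(cells), "of", 8 ** 3, "cells occupied")
print("largest / smallest cell count:", max(cells.values()), min(cells.values()))
```

Non-overlapping tuples are used here; whether the plots in this post used overlapping or non-overlapping tuples is not stated, so treat that as an assumption.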

So, my conclusion is that even though the Black RNG passed the Diehard Battery with good results, the generated numbers still have a very strong structure in them. This structure is well hidden if the cache is very large, e.g. a million bits. But a large cache won't remove the structure; it only interlaces multiple structured sequences together. The setup that Mr RB calls "the worst case" is:

> For the random data I just used the value in the Black RNG "cache", this is a worst case scenario as normally you would generate data on the fly as the engine progresses.

This, in my opinion, is the best case you can get. The whole (million byte) cache should be used as a sequence of random bytes. Then the whole cache should be "scrambled" with some passes with the engine, and even then I wouldn't trust the data enough to use it in Monte Carlo applications.

I also experimented with different numbers of passes over the cache. Even with 500 passes over the whole cache before saving the next generated number, there was practically no change in the generated pattern. The only thing affecting the pattern is the cache size, and that is equivalent to setting up multiple generators in parallel with different seeds, effectively leading to different sets of structured sequences. All datasets were generated with the cache first filled with random numbers from a library RNG.
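To illustrate the "multiple generators in parallel" point, here is a toy sketch. It does not implement the Black RNG; it uses a deliberately weak 8-bit LCG (my own stand-in, with hypothetical function names) as the structured sequence. Several copies with different seeds are interleaved into one stream, and reading that stream with a stride equal to the number of copies gives each structured sequence back unchanged.

```python
def toy_lcg(seed, n, a=5, c=1, m=256):
    """Deliberately weak generator with period 256 (stand-in, not the Black RNG)."""
    out, x = [], seed % m
    for _ in range(n):
        x = (a * x + c) % m
        out.append(x)
    return out


def interleave(lanes):
    """Emit lane0[0], lane1[0], ..., then lane0[1], lane1[1], ..."""
    return [v for step in zip(*lanes) for v in step]


def deinterleave(stream, k):
    """Recover the k lanes by reading the stream with stride k."""
    return [stream[i::k] for i in range(k)]


seeds = [3, 77, 141, 200]                 # arbitrary illustrative seeds
lanes = [toy_lcg(s, 512) for s in seeds]  # four structured sequences
stream = interleave(lanes)                # what a larger "cache" would emit
recovered = deinterleave(stream, len(seeds))

print("lanes recovered intact:", recovered == lanes)
print("lane 0 still repeats after 256 steps:", lanes[0][:256] == lanes[0][256:])
```

The interleaved stream looks more mixed than any single lane, but each lane's structure is fully preserved, which is the effect I would expect from simply enlarging the cache.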