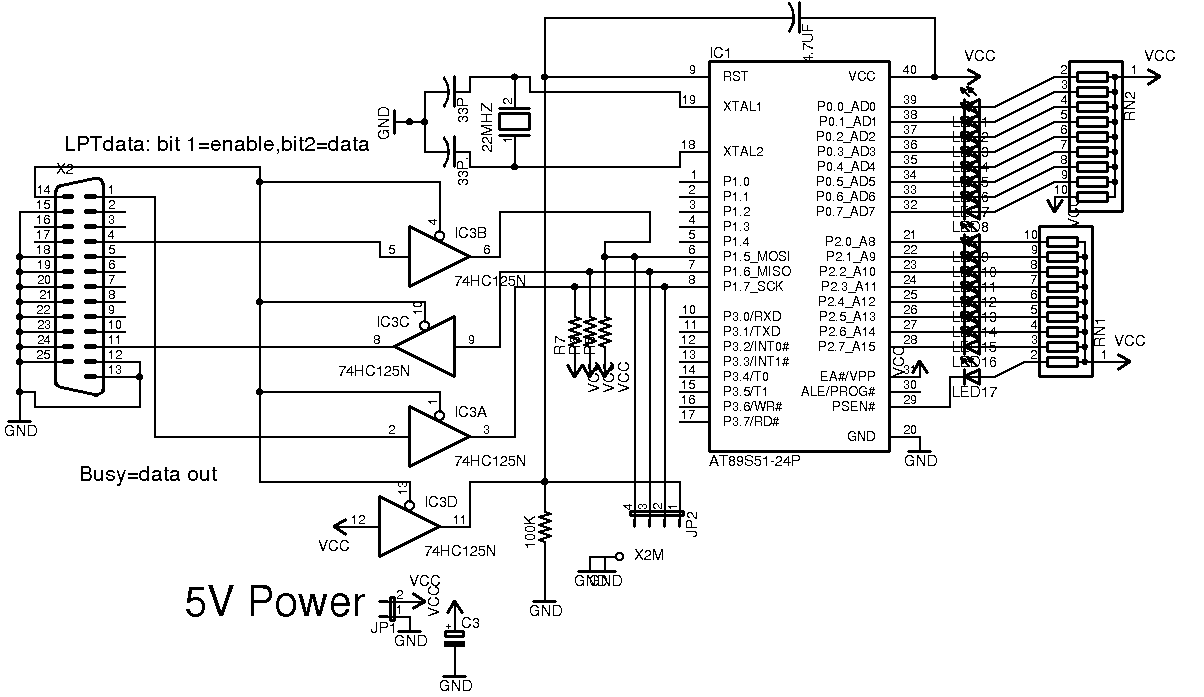

I built the circuit shown above to program an AT89S52 chip serially through a PC parallel port.

I know the parallel port works because I have used it with my other programmer, so that isn't the issue. When I program the chip and look at the LEDs (shown on the right), the output isn't what it should be.

For example, if I program only the following code:

    mov P2,#0FFh    ; write 1s to every Port 2 pin
    mov P0,#0FFh    ; write 1s to every Port 0 pin
    sjmp $          ; loop here forever

then all the lights should go off after programming, but they don't.

On the software side, I noticed that when I verify the bytes after a chip erase, the failure occurs at a different location each time. On one erase attempt, verification passed for 126 of 384 bytes and failed at byte 127. On another, it passed for only 11 bytes and failed at byte 12.
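The check described above can be sketched roughly like this in Python. The names `verify_erased` and `read_byte` are hypothetical stand-ins, not the actual programmer software, and the sketch assumes an erased flash byte reads back as 0xFF.

```python
ERASED = 0xFF  # assumption: an erased flash location reads back as 0xFF


def verify_erased(read_byte, length):
    """Return how many bytes read back as erased before the first mismatch.

    read_byte is a hypothetical callable that takes an address and returns
    the byte read from the chip at that address.
    """
    for address in range(length):
        if read_byte(address) != ERASED:
            return address  # number of bytes that passed before the failure
    return length  # every byte passed


if __name__ == "__main__":
    # Fake memory with a bad byte at index 126 (the 127th byte).
    memory = [ERASED] * 384
    memory[126] = 0x00
    passed = verify_erased(lambda address: memory[address], len(memory))
    print(f"validated {passed} of {len(memory)} bytes")
```

If the count changes from run to run on the same chip, as it does here, the reads themselves are probably unreliable, which would point at timing or signal integrity rather than at specific bad flash cells.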

My program waits 10 µs after every pin state change. So if all lines are low and I want to set the data line to 1 and then raise the clock, it takes 20 µs: 10 to set the data and 10 to raise the clock.
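As a rough illustration of the timing described above, here is a small Python sketch that simulates the pins instead of touching real hardware. `SimulatedPort`, `set_pin` and `send_byte` are hypothetical names, and sending MSB-first with the data set up before the rising clock edge is an assumption that should be checked against the AT89S52 datasheet.

```python
PIN_DELAY_US = 10  # delay applied after every pin state change


class SimulatedPort:
    """Stand-in for the parallel port: tracks pin levels and elapsed time."""

    def __init__(self):
        self.pins = {"MOSI": 0, "SCK": 0}  # all lines start low
        self.elapsed_us = 0

    def set_pin(self, name, value):
        # Only an actual change of state costs a delay.
        if self.pins[name] != value:
            self.pins[name] = value
            self.elapsed_us += PIN_DELAY_US


def send_byte(port, byte):
    """Shift one byte out, MSB first (assumed), one clock pulse per bit."""
    for bit in range(7, -1, -1):
        port.set_pin("MOSI", (byte >> bit) & 1)  # set up the data bit
        port.set_pin("SCK", 1)                   # rising edge
        port.set_pin("SCK", 0)                   # falling edge


if __name__ == "__main__":
    port = SimulatedPort()
    port.set_pin("MOSI", 1)
    port.set_pin("SCK", 1)
    print(port.elapsed_us)  # 20: one data change plus one clock change
```

With this scheme a full clock period is at least 20 µs, so the serial clock never exceeds about 50 kHz.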

I also included a slow mode for anything that doesn't process consecutive bytes, such as erase. For those operations, I use a 2 ms delay.
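The two delay modes can be sketched as below. All names are hypothetical, and `ASSUMED_ERASE_CYCLE_US` is only an assumption: my recollection is that the AT89S52 datasheet lists a chip-erase cycle time of around 500 ms, which should be confirmed in its serial programming characteristics before relying on it.

```python
FAST_DELAY_US = 10       # delay for consecutive-byte transfers
SLOW_DELAY_US = 2_000    # 2 ms delay used in slow mode
SLOW_OPERATIONS = {"erase"}  # hypothetical set of one-off operations

# Assumption to verify against the AT89S52 datasheet: chip-erase cycle time.
ASSUMED_ERASE_CYCLE_US = 500_000


def delay_for(operation):
    """Pick the delay in microseconds for a given operation name."""
    return SLOW_DELAY_US if operation in SLOW_OPERATIONS else FAST_DELAY_US


def extra_wait_after_erase_us(already_waited_us):
    """Remaining wait before the first read, under the assumed erase time."""
    return max(0, ASSUMED_ERASE_CYCLE_US - already_waited_us)


if __name__ == "__main__":
    waited = delay_for("erase")
    print(extra_wait_after_erase_us(waited))  # remaining wait in microseconds
```

If that assumed figure is right, a 2 ms pause after the erase instruction would end long before the erase finishes, and reading back mid-erase could explain verification failing at a different byte each time.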

So what is the problem? Is my chip officially garbage or do I need a giant erase time or what?