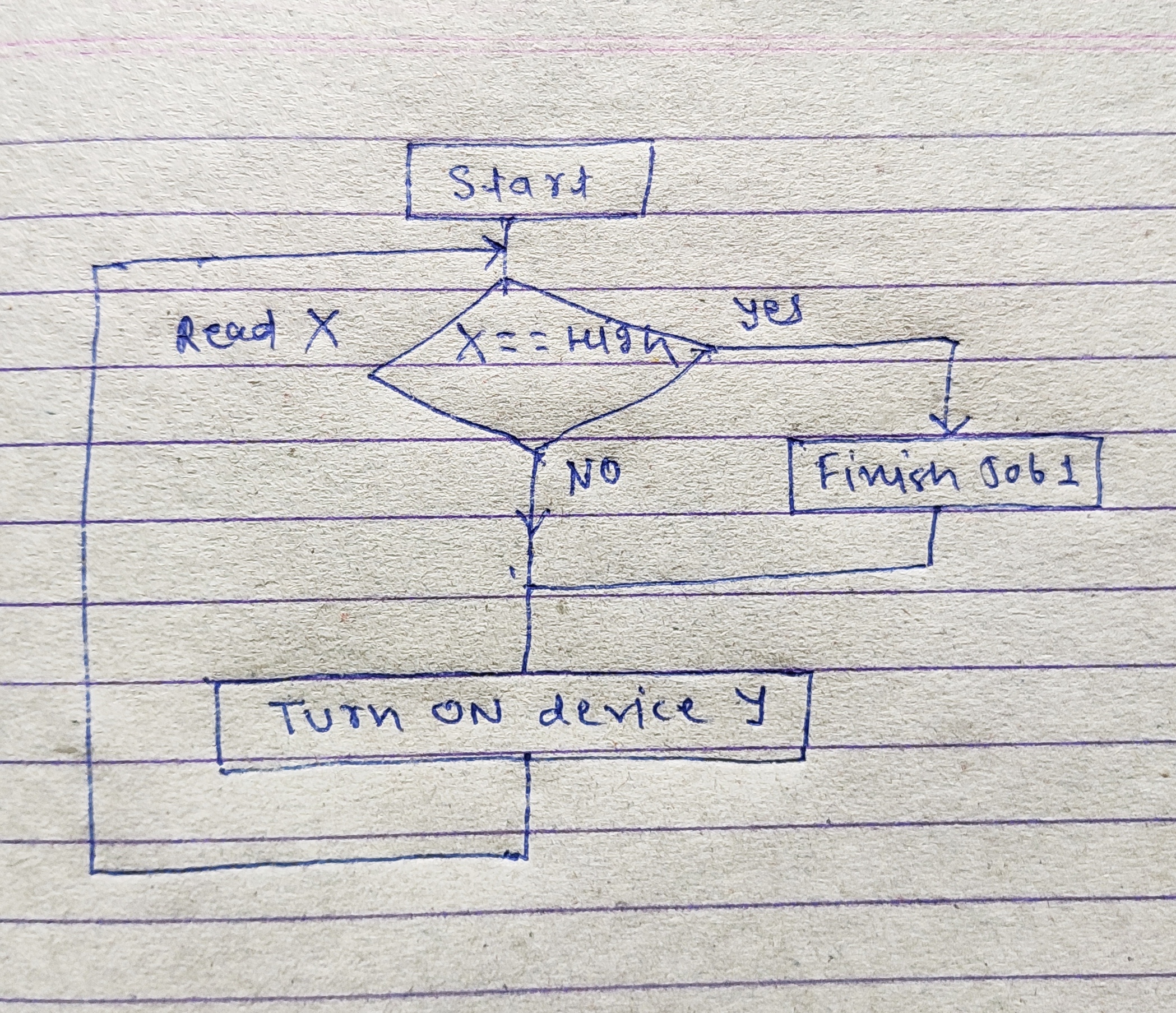

I have a general question. Assume we have a PIC12F1840, a sensor X that detects an object, and an output device Y.

Task 1: when the sensor detects an object, the micro reads the sensor output and finishes the first job.

Task 2: the micro turns on device Y.

Response time of X is 60 ms.

Response time of Y is 30 ms.

Approximately how much time does the PIC micro take to complete the two tasks?
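
To make the question concrete, here is a rough back-of-the-envelope sketch of how the total could be estimated. It assumes the two response times simply add in series, that the PIC runs from a 32 MHz clock (one instruction cycle per 4 oscillator clocks, so 125 ns each), and that the firmware between reading X and switching Y takes about 200 instruction cycles. The 200-cycle figure and the function names are hypothetical, chosen only for illustration.

```c
#include <stdint.h>

/* Duration of one instruction cycle in nanoseconds.
   PIC mid-range cores execute one instruction cycle per 4 oscillator
   clocks (Fosc/4), so 32 MHz gives 125 ns. */
static uint32_t instr_cycle_ns(uint32_t fosc_hz)
{
    return (uint32_t)(4000000000ULL / fosc_hz);
}

/* Rough end-to-end latency in microseconds, assuming the sensor
   response, the firmware work, and the device response happen strictly
   one after another. instr_cycles is an assumed estimate of the
   firmware work, not a measured value. */
static uint32_t total_latency_us(uint32_t sensor_ms,
                                 uint32_t device_ms,
                                 uint32_t instr_cycles,
                                 uint32_t fosc_hz)
{
    uint64_t cpu_ns = (uint64_t)instr_cycles * instr_cycle_ns(fosc_hz);
    return sensor_ms * 1000u + device_ms * 1000u + (uint32_t)(cpu_ns / 1000u);
}
```

Under these assumptions, `total_latency_us(60, 30, 200, 32000000)` gives 90025 µs: the micro itself contributes only about 25 µs, so the total is dominated by the 60 ms and 30 ms response times of X and Y.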
